There is a plethora of frameworks used within the audio field, from music CD pressing all the way to broadcasting standards. I have chosen broadcasting as the basis for this research since it is well documented and still extensively used worldwide. However, it is worth noting that different regions follow different standards, and apply their own guidelines and laws to varying degrees.

A common practice within the audio industry is the use of compression to increase the “perceived loudness” of an audio track. Compression is used in virtually every professional recording and mastering studio, and whether it is applied via outboard gear or software, the principle doesn’t change. To sum it up, compression increases the average level of the sound by reducing loud sounds and then amplifying the whole signal, which brings the quiet sounds up. On top of this, audio engineers are often pushed to abuse peak limiting, clipping and the other tools within the dynamics family, not to make broadcasts sound better but to make them louder and thus more attention grabbing.
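To make the idea concrete, here is a minimal sketch of a static compressor curve working purely on levels in dB. The function name `compress_db` and the threshold, ratio and make-up gain values are my own illustrative choices, not settings taken from any standard or product.

```python
def compress_db(level_db, threshold_db=-20.0, ratio=4.0, makeup_db=10.0):
    """Return the output level in dB for a given input level in dB."""
    if level_db > threshold_db:
        # Above the threshold, every `ratio` dB of input yields 1 dB of output.
        level_db = threshold_db + (level_db - threshold_db) / ratio
    # Make-up gain lifts the whole signal, which raises the quiet passages.
    return level_db + makeup_db


for level in (-40.0, -20.0, 0.0):
    print(level, "->", compress_db(level))
```

With these assumed settings, a 40 dB range between the quietest and loudest input (-40 dB to 0 dB) shrinks to 25 dB at the output (-30 dB to -5 dB), so the average level sits much closer to the peaks.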

The underlying problem with dynamics compression is the inconsistency between different broadcasters, labels and institutions over what is deemed acceptable and which governing frameworks are used for commercial purposes. The number one source of complaints from consumers is the lack of uniform loudness when watching broadcast content. In the past, sudden leaps in loudness between ads, station promos and TV programs startled viewers.

Among the many technical terms used when handling audio levels, the three most common abbreviations are LKFS, LUFS and LU. These terms are closely related and all describe loudness on a scale where one unit corresponds to one decibel.

- 1 unit of LKFS (Loudness, K-weighted, relative to Full Scale) = 1 dB (US)

- 1 unit of LUFS (Loudness Units relative to Full Scale) = 1 dB (EU)

- 1 unit of LU (Loudness Units) = 1 dB (International)

The difference between these terms is mainly regional usage. Target levels across the different broadcasting standards usually differ only marginally. The US, for example, employs a target level of -24 LKFS, whereas in Europe (EBU R128) the target level is -23 LUFS. To allow a more uniform number, a relative measure has been defined, which is the LU. Regardless of whether the target is -24 LKFS or -23 LUFS, a program sitting exactly at its target level reads 0 LU.
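The relationship between the absolute and relative scales is a simple subtraction. The sketch below shows it with the two target levels mentioned above; the helper name `to_lu` is hypothetical.

```python
def to_lu(measured, target):
    """Convert an absolute loudness (LKFS or LUFS) to LU relative to a target."""
    return measured - target


US_TARGET_LKFS = -24.0   # target level used in the US
EBU_TARGET_LUFS = -23.0  # target level in EBU R128

print(to_lu(-24.0, US_TARGET_LKFS))   # a program exactly on the US target
print(to_lu(-23.0, EBU_TARGET_LUFS))  # a program exactly on the EBU target
print(to_lu(-20.0, EBU_TARGET_LUFS))  # a program 3 LU louder than the EBU target
```

The first two calls both give 0 LU, and the third gives 3 LU, which is why a relative meter can be read the same way in either region.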

In Australia, it is common practice to follow an “Operational Practice” (OP) guideline for broadcasting standards. For a long time, OP 48 was the guideline used in Australia. It relied on sample peak measurement, which reads the highest sample value found in the digital audio signal.

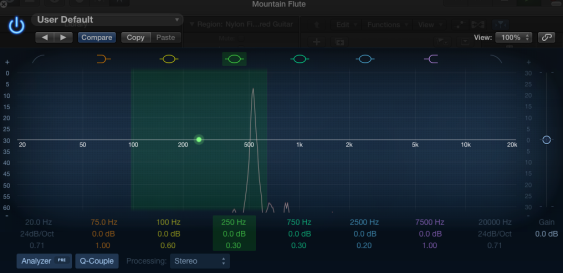

OP 48 is based on VU and peak levels, which measure the electrical signal of the audio rather than how loud the audio actually sounds to the listener. Essentially, by applying multi-band compression and EQ you could make the sound seem louder while still meeting the OP 48 requirements. It is also important to keep in mind that this metering does not account for equalisation, so a boost in the frequencies to which the ear is most sensitive (1kHz to 5kHz) was not taken into consideration.

However, as of January 1st 2013, a new OP 59 requirement came into effect in Australia and New Zealand, bringing Australia into line with the US, Europe and many other countries in the world. This is a step further from the aforementioned OP 48 in the sense that audio is now measured on the average perceived loudness of the soundtrack, which removes the incentive to exploit signal processing. Alongside the loudness measurement, true-peak measurement has replaced the old sample peak measurement. A true-peak meter estimates the peaks that occur between samples once the signal is reconstructed, which a sample peak meter can miss.
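The gap between a sample peak and a true peak can be shown with a small sketch. It assumes a test tone at a quarter of the sample rate, offset so that no sample lands on a crest, and it estimates the true peak by 4x oversampling with a Hann-windowed sinc interpolator. That interpolator is my own stand-in and not the exact filter a compliant meter uses; the function names are hypothetical too.

```python
import math


def sample_peak(samples):
    """Highest absolute sample value."""
    return max(abs(s) for s in samples)


def true_peak_estimate(samples, oversample=4, half_width=16):
    """Estimate the inter-sample peak by windowed-sinc interpolation."""
    peak = 0.0
    # Skip the edges, where the interpolation kernel would run off the signal.
    for n in range(half_width, len(samples) - half_width):
        for step in range(oversample):
            t = n + step / oversample
            value = 0.0
            for k in range(n - half_width + 1, n + half_width + 1):
                d = t - k
                sinc = 1.0 if d == 0 else math.sin(math.pi * d) / (math.pi * d)
                window = 0.5 * (1.0 + math.cos(math.pi * d / half_width))  # Hann
                value += samples[k] * sinc * window
            peak = max(peak, abs(value))
    return peak


# Full-scale sine at a quarter of the sample rate, shifted by 45 degrees
# so that every sample lands at about 0.707 instead of on a crest.
tone = [math.sin(math.pi / 2 * n + math.pi / 4) for n in range(128)]

sp = sample_peak(tone)
tp = true_peak_estimate(tone)
print("sample peak: %.2f dBFS" % (20 * math.log10(sp)))
print("true peak estimate: %.2f dBTP" % (20 * math.log10(tp)))
```

The sample peak comes out roughly 3 dB below full scale, while the estimate lands close to 0 dBTP, the real crest of the waveform. A limiter driven only by the sample peak reading would leave about 3 dB less headroom than it appears to.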

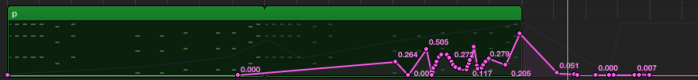

The loudness measurement itself comes from a system developed by the International Telecommunication Union (ITU), which uses an algorithm to estimate the loudness a human listener perceives. This system is commonly referred to as ITU-R BS.1770, and with the recent OP 59 requirement the revision in use is ITU-R BS.1770-3. By using a meter that follows ITU-R BS.1770-3 you can get a quantitative measure of the loudness of an audio track.

Music used within broadcasting varies widely in style and genre. To obtain a uniform level across sonically different genres, such as classical music and rock music, loudness meters use a tool called a “gate”. A gate pauses the loudness measurement whenever the signal falls below a threshold, so that silence and very quiet passages do not drag the average down. In ITU-R BS.1770-3 the relative gate sits 10 LU below the program's loudness as measured before that gate is applied.
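Here is a rough sketch of how gating changes the result. It assumes the loudness of each short measurement block has already been computed (BS.1770 meters use overlapping 400 ms blocks), and it applies two gates as I understand the recommendation: an absolute gate at -70 LUFS and a relative gate 10 LU below the loudness of the blocks that passed the absolute gate. The function names and the example block values are made up for illustration.

```python
import math

ABSOLUTE_GATE_LUFS = -70.0
RELATIVE_GATE_LU = -10.0


def energy_mean_lufs(block_lufs):
    """Average block loudness values in the energy domain, not in dB."""
    mean_energy = sum(10 ** (l / 10) for l in block_lufs) / len(block_lufs)
    return 10 * math.log10(mean_energy)


def gated_loudness(block_lufs):
    """Integrated loudness with absolute and relative gating.

    Assumes at least one block is louder than the absolute gate.
    """
    audible = [l for l in block_lufs if l > ABSOLUTE_GATE_LUFS]
    relative_threshold = energy_mean_lufs(audible) + RELATIVE_GATE_LU
    kept = [l for l in audible if l > relative_threshold]
    return energy_mean_lufs(kept)


# Hypothetical program: six blocks of dialogue followed by four very quiet blocks.
blocks = [-23.0] * 6 + [-50.0] * 4

print("ungated: %.1f LUFS" % energy_mean_lufs(blocks))
print("gated:   %.1f LUFS" % gated_loudness(blocks))
```

Without the gate the quiet passage pulls the reading down by roughly 2 LU, which would invite the mixer to turn the dialogue up. With the gate the quiet blocks are ignored and the reading reflects the level of the foreground sound.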

All of these processes are a step in the right direction for broadcasting standards and will hopefully address the issue of inconsistent levels between ads, station promos and TV programs. With loudness-based metering and true-peak measurement, sound engineers can be more dynamic when creating soundtracks, and there is more consistency in average loudness regardless of which regional regulations are in use.

References

http://www.tcelectronic.com/loudness/loudness-explained/

http://www.sandymilne.com/op-59-and-loudness-standards-for-australian-tv/

http://op59.sounddeliveries.com/Info/FAQ

http://www.freetv.com.au/media/Engineering/Free_TV_OP59_Measurement_and_Managemnt_of_Loudness_for_TV_Broadcasting_Issue_2_December 2012.pdf